AMD’s Rise Signals Fresh Competition in AI Chips. Startups Are in the Game Too.

Plus, a dispatch from Anthropic's Claude Café in New York

The Week in Short

Lisa Su’s AMD takes on Nvidia. West Village pop-up charms with “IRL” AI. New Carta data shows investors evenly split on key strategy. Two $2 billion AI rounds dominate VC funding. OpenAI wants to build its own app store. Robots rev up, but not the humanoid, Tesla Optimus kind. Polymarket & the New York Stock Exchange marry gambling & investing.

The Main Item

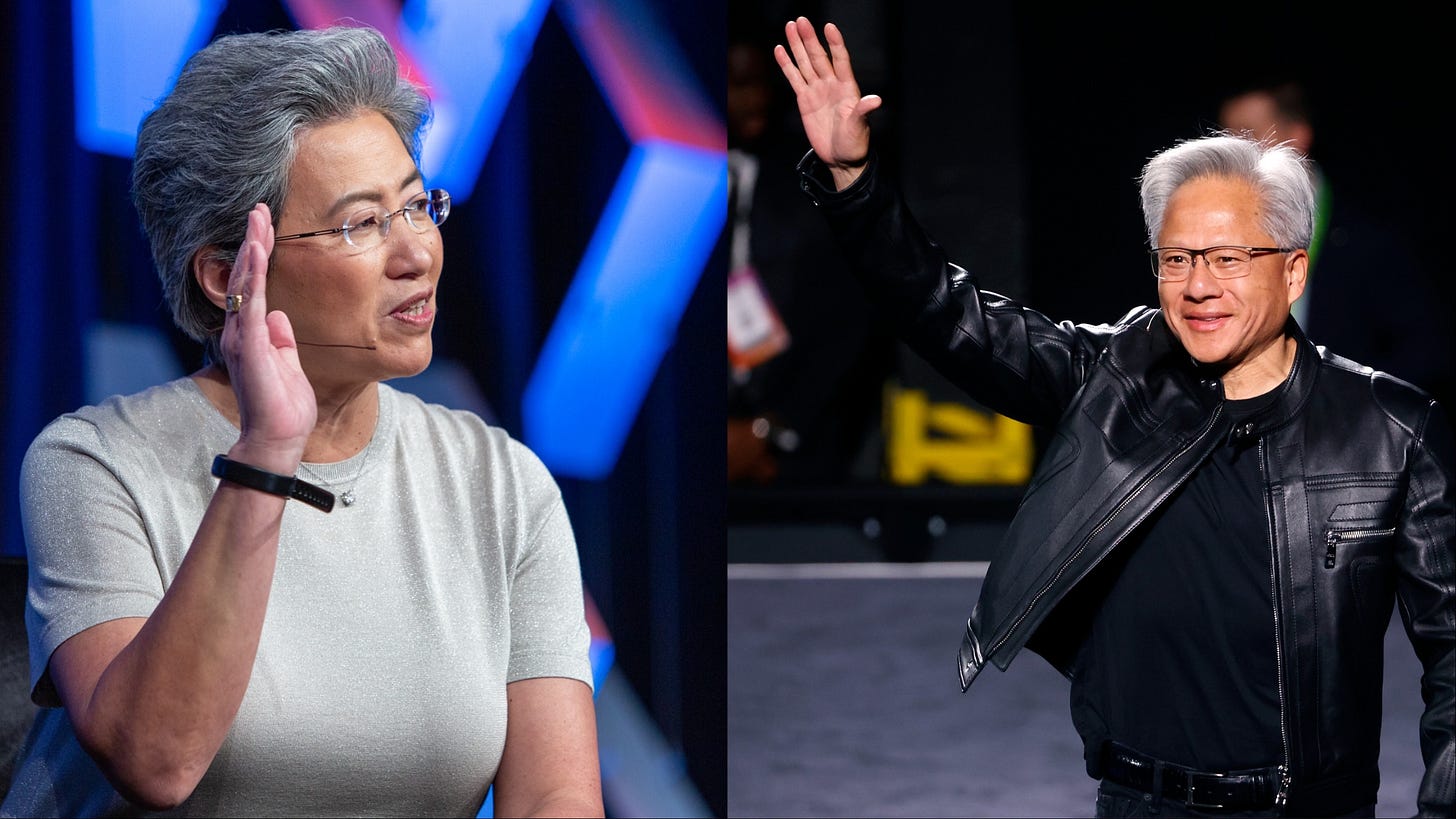

Lisa Su vs. Jensen Huang: An Underrated Rivalry

Nvidia CEO Jensen Huang has pulled off many remarkable tricks since he co-founded Nvidia more than 30 years ago, the latest being to position himself, alongside OpenAI’s Sam Altman, as a rock-star leader of the AI revolution. His trademark leather jacket and rousing arena performances have gone a long way toward vaulting Nvidia to the apex of the industry and an astonishing $4.7 trillion market cap.

AMD’s Lisa Su is a study in contrasts: a woman in an especially male-dominated industry, she lacks flash and focuses on execution rather than hype. She’s the steady, methodical counterpart to the manic Huang, the tortoise to his hare in the AI chip race.

Along with Google, Broadcom and a handful of startups, she’s poised to make competition in the AI chip business a lot more heated than it is today.

Nvidia, of course, remains far ahead of the pack, and on the face of it AMD sits in the same distant-number-two position it occupied during the PC era. Under the colorful Jerry Sanders, one of the original leaders of the Silicon Valley chip industry, AMD became a designated “second-source” supplier in the 1980s for the Intel-designed x86 chips that powered PCs.

Amid waves of litigation, AMD later cloned the functionality of Intel’s chips with its own designs. Yet no matter how good its chips were (and AMD at times leapfrogged Intel), it was playing in its rival’s sandbox. If you wanted to make a Windows PC, you had to use an x86 processor, and because Intel controlled the upgrade cycle, it always had the upper hand.

The AI industry doesn’t have the same kind of design lock-in. Nvidia GPUs might be the best choice for training LLMs, but they are not the only choice. There’s AMD, and there are also Google’s Tensor Processing Units (TPUs), which the search giant uses extensively to train its own AI. Worries about OpenAI experimenting with Google’s TPUs are part of what prompted Nvidia’s recent investment in Altman’s juggernaut.

Billions in New Funds for Chip Startups

Amazon, Apple and Meta are also designing their own AI silicon, and OpenAI last month announced it is doing the same with Broadcom as a partner.

The mounting competition helps explain Nvidia’s extremely aggressive strategy of investing in many of its customers. The revenue round-tripping can amount to Nvidia essentially buying its own products, but the logic is clear: if there are lots of data centers out there full of Nvidia chips, that’s an incentive for AI developers to choose Nvidia, creating whatever lock-in can be had.

All of that puts AMD in a much more interesting position than the one it held in the PC era, even if it is still a distant number two: it isn’t limited by dependence on an architecture controlled by someone else.

That’s also the reason for VC bullishness on AI chip startups such as Cerebras, which just raised a fresh $1.1 billion at an $8 billion valuation for its large-wafer training system and is planning to re-up its delayed IPO; Groq, which raised $750 million at a $6.9 billion valuation last month; and Tenstorrent, which raised $700 million at the end of last year at a $2.6 billion valuation.

Huang still has plenty of weapons at his disposal, including fat profit margins that give him a lot of room to maneuver financially. With demand for its latest chips outstripping supply, Nvidia has wisely chosen not to squeeze out every dollar, lest it alienate customers in the long run. But it has ample margin to fight on price if it needs to.

That would bring its own problems: Nvidia’s extraordinary profits are by themselves responsible for a lot of the retail investor enthusiasm for AI. It’s easy to see even a hint of margin pressure bringing a sell-off in Nvidia’s sky-high shares — they’re worth more than ten times what they were at the beginning of 2023 — with untold ripple effects.

Intel Outside

Yet robust competition in chip design would be good for the industry, if not for Nvidia. Equally important would be multiple providers on the chip production side, though for now that’s a more distant prospect. All of the aforementioned companies (even Intel itself, for some products) rely on TSMC to make their chips.

Even leaving aside the national security problem of the American AI economy relying on a single Taiwanese company as its linchpin, it’s certainly not a healthy state of affairs for so much to depend on a single vendor, no matter how exceptionally capable it has proven as a partner over the years.

Which brings us back to Intel. However ham-handed, President Trump’s efforts to revive the company as a national champion that can produce silicon with the best of them have a good rationale in this moment. There would be poetic justice, too, in Intel, once the world’s only brand-name chip company, resurrecting itself as the manufacturing partner for Lisa Su’s AMD.

IRL

AI Comes to the Consumer Pop-Up

Tech workers who’ve been waiting for their influencer moment got a chance last week in New York, courtesy of Anthropic.

The AI lab teamed up with Air Mail, the West Village coffee shop and gift store from former Vanity Fair power broker Graydon Carter, to put on a limited-time “Claude Café” event. We waited in line with dozens of AI enthusiasts to snag a “thinking cap” baseball hat and drink a latte in the small space.

After the launches of Meta’s Vibes and OpenAI’s Sora 2, Anthropic is clearly positioning itself as the “anti-slop” AI alternative and the thoughtful consumer’s choice.

Its recent video marketing spots echo early Apple commercials, with “Think Different” simplified to just “Keep Thinking,” leaving the alternative archly unstated. OpenAI’s ties to Microsoft also help Anthropic cast itself as the cooler, scrappier upstart taking on a dominant monolith.

Judging by the excitement and online impressions, the marketing team should pat itself on the back. The event even attracted some out-of-towners: at least one attendee posted on X that he flew into New York from Charlotte to visit the shop and snag a hat. Adweek reported that more than 5,000 people visited the café during its run.

Yet Anthropic’s core customer base is still mostly enterprise users, while OpenAI is running away with the consumer AI app business.

It’s unclear whether the Claude marketing campaign is the beginning of a big effort to break out of that box or simply a way to build general brand recognition beyond Anthropic’s coding assistants. Either way, other AI brands are seeing the appeal of IRL events: Cursor is hosting its own café meetup in New York on October 21.

One Big Chart

VC Funds Split on Concentrated Bets vs. Spreading It Around

Some VCs preach concentration as the key to success, with a small number of high-conviction bets. Others say making a lot of plays is crucial for raising the odds of a mega-hit. New data from Carta suggests that both camps have similar numbers of followers, and even small funds are sometimes surprisingly diversified.